The Alienating Affect of Effective Altruism

Thoughts on Doing Good Better and The Precipice

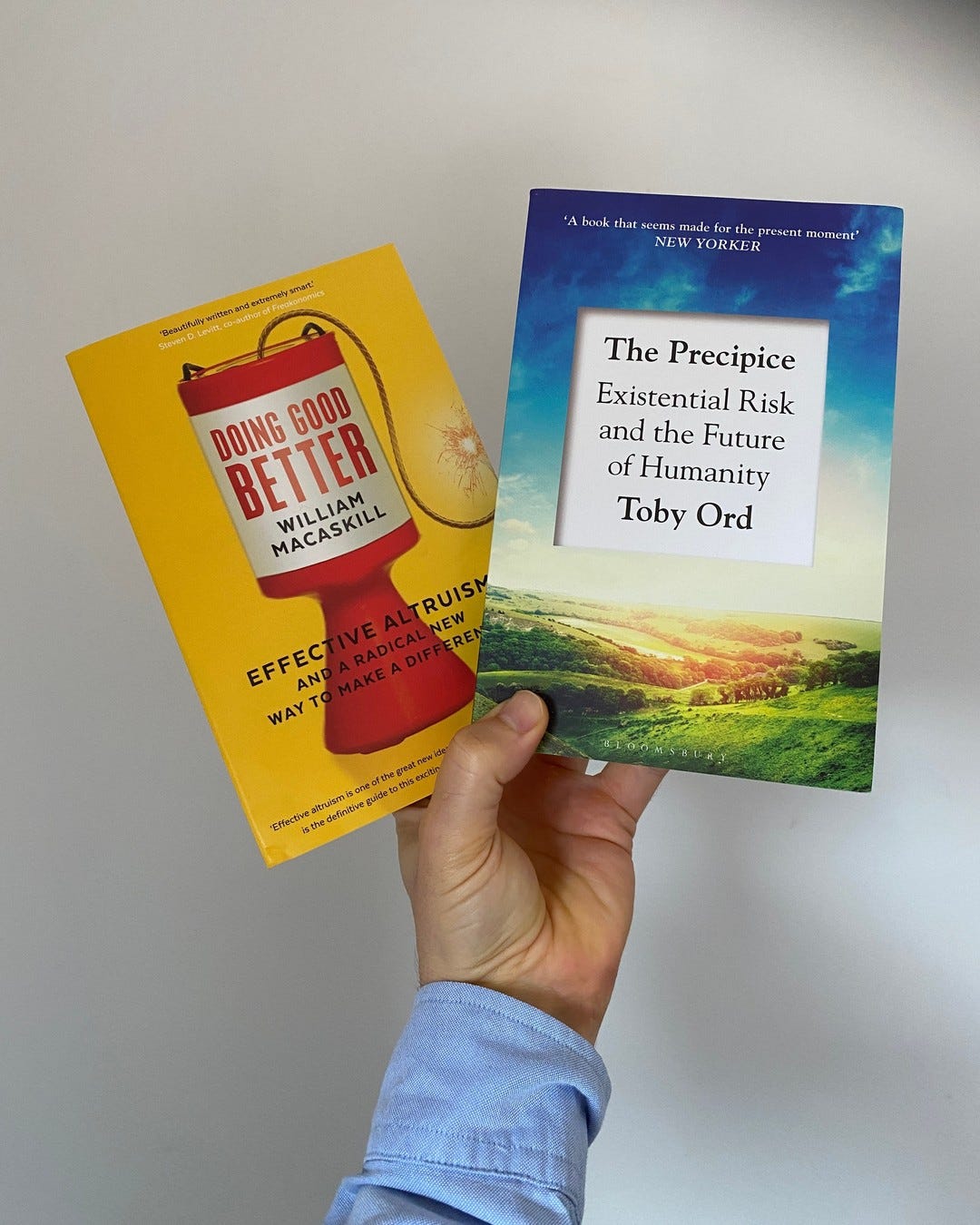

I love a free book and have copies of The Bible, the Bhagavad Gita, and The Book of Mormon thanks to the efforts of evangelists. Into this collection go two books from the founders of the Effective Altruism (EA) movement: Doing Good Better (2016) by William MacAskill, gifted via EA Edinburgh, and The Precipice (2020) by Toby Ord, courtesy of the 80,000 Hours website, an offshoot of EA that encourages people to do the most consequential work possible.

What I especially like about receiving free books from Effective Altruists is that I get to imagine them doing a cost-benefit analysis of giving away books versus, say, spending that money on a Facebook ad campaign. The latter may be worthwhile if you are a traditional charity, but the EA movement has a religious quality[1] that turns adherents into missionaries. They don’t want to guilt-trip you into donating; they want to change your mental operating system. Unlike most religions, though, they encourage criticism and are currently running a competition where you can win up to $100,000 by writing an essay ‘red-teaming’ the movement.

What is Effective Altruism?

EA is, essentially, a combination of moral philosophy and economics. Moral philosophy is concerned with doing good but doesn’t care much about efficiency. Economics is all about efficiencies but is indifferent to suffering. EA allows you to be virtuous and smart.

EA assesses actions in the world according to how much good they will do, with ‘good’ quantified through measures such as Quality-Adjusted Life Years (QALYs) gained. For instance, EA encourages you to calculate whether it is better to spend $40,000 training one guide dog in the US or curing 1,000 Africans of river blindness.
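To make the arithmetic in that example explicit, here is a back-of-the-envelope sketch in Python. It uses only the two figures quoted above and compares cost per person helped; it is not a QALY calculation, which would also need assumptions about how much each intervention improves a life and for how long. The helper `cost_per_person` is a hypothetical name of my own, not anything from the EA literature.

```python
# Back-of-the-envelope comparison using only the figures quoted above.
# NOTE: this compares cost per person helped, NOT QALYs gained; a QALY
# estimate would need further (assumed) inputs on size and duration of benefit.

BUDGET_USD = 40_000  # the $40,000 figure from the guide-dog example


def cost_per_person(budget_usd: float, people_helped: int) -> float:
    """Hypothetical helper: cost of helping one person under a given budget."""
    if people_helped <= 0:
        raise ValueError("people_helped must be positive")
    return budget_usd / people_helped


guide_dog = cost_per_person(BUDGET_USD, 1)            # one dog, one person helped
river_blindness = cost_per_person(BUDGET_USD, 1_000)  # 1,000 people cured

print(f"Guide dog: ${guide_dog:,.0f} per person")
print(f"River blindness: ${river_blindness:,.0f} per person")
print(f"Ratio: {guide_dog / river_blindness:,.0f}x")
```

On these numbers, the same money helps a thousand times as many people, which is the kind of gap EA's cost-benefit framing is designed to surface.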

The origins of Effective Altruism

EA is a practical movement and its official history doesn’t get bogged down in intellectual genealogy. They skate over the influence of Peter Singer and Derek Parfit (Toby Ord’s doctoral advisor), whose utilitarian thought experiments have woken many people out of their moral slumber, and instead focus on the institutions they’ve created.

The story William MacAskill tells in Doing Good Better is that EA is a necessary corrective to the failures of modern charity, the classic example being the PlayPump, a playground roundabout that was supposed to pump water as children played on it. Instead, you should fund things like malaria nets, which cost $10 and could ‘save a life.’[2] Or, rather than buying books for African kids, deworm them so they can actually get to school in the first place. Check out GiveWell for more examples of effective-but-underfunded charities.

If EA had stopped there and spent their time helping improve the lives of the poorest humans on the planet, I think they would be hailed as heroes. But they didn’t stop there.

Expanding the circle of empathy

In his TED talk, Peter Singer argues that, morally speaking, there is no difference between a child dying in front of you and a child dying on another continent. If you would ruin a suit by jumping into a pond to save a drowning person, why not give away the value of the suit by donating that sum to the poor? Singer’s career has been dedicated to expanding our circle of empathy in the hope of making moral progress.

I find the idea admirable, but I wonder how much it comes from not seeing suffering on your doorstep. It’s easy to imagine someone in Oxford being principally concerned with people 5,000 miles away because their life is so placid. Would they have the same concerns if they lived somewhere without dreaming spires, like Glasgow?[3]

Perhaps I lack compassion compared with EA advocates. This story about Derek Parfit from Larissa MacFarquhar, via Slate Star Codex, is striking in this regard:

When I was interviewing [Parfit] for the first time we were in the middle of a conversation and suddenly he burst into tears. It was completely unexpected, because we were not talking about anything emotional or personal, as I would define those things. I was quite startled, and as he cried I sat there rewinding our conversation in my head, trying to figure out what had upset him. Later, I asked him about it. It turned out that what had made him cry was the idea of suffering. We had been talking about suffering in the abstract.

Or check out the dating profile of Brian Tomasik for insight into utilitarian psychology:

I'm troubled by the suffering of bugs around the house, and I try to euthanize injured or dying bugs, typically by putting them into a container in the freezer. While I wouldn't expect a romantic partner to actively care for bugs in this way, I would be emotionally upset by deliberate swatting, crushing, drowning, etc of bugs.

Compassion is learned, and these philosophers seem to have thought their way into these intense feelings rather than being born with such sensitivities. Richard Rorty argues that Dickens’ novels opened people’s eyes to suffering far better than more practical campaigns and helped achieve moral progress. However, for a movement that wants to do the most good possible, departing from moral intuitions can be alienating, as I’ve found whenever I’ve talked about EA with normies.

X-Risk

Toby Ord’s The Precipice advances the debate by introducing the dimension of time. For instance, you may save a thousand lives by funding a food programme, but if you fund research into vaccines, you could potentially save millions of lives over a century.

But why be content with a mere century? The sun won’t make the Earth uninhabitable for another billion years. If humanity were wiped out now, we would lose the trillions of lives that might be lived as we colonise the universe.

This is the idea that, as well as thinking about people currently living, we have a moral duty to consider the people yet to come. Since the first atomic bomb was detonated on 16 July 1945, we have been subject to existential risks (X-risks), that is, the potential for humanity to wipe itself out. For Ord, the loss of the human species would be a catastrophe, and he thinks that we urgently need to reprioritise research to ensure we deal with natural and human-created threats.

Here is his estimate of the chance of various existential catastrophes happening in the next hundred years.

According to Ord and the EA community, the greatest threat to humanity is unaligned artificial intelligence, which apparently has a 10% chance of wiping us out over the next century. We are fast approaching the Singularity, where supercomputers can outsmart humanity in any field. What if the computer decides that we are surplus to requirements? How can we prevent the universe from being turned into a paper-clip factory?

By this stage, we are so abstracted from ordinary human suffering that we are simply arguing over numbers in a spreadsheet. It seems awfully convenient that EA nerds can stop worrying about structural problems like how to deal with homelessness and can just mess around on computers all day running weird thought experiments. It all seems a long way from the original focus of fixing present-day dystopias.

If you are interested in reading one of the canonical EA books, you can get a free copy via this form.

Responding to feedback

One of the reasons I started this blog was to improve my thinking and identify any intellectual blind spots. Once you publish your thoughts, you have ‘skin in the game’ and an extra incentive to be accurate. As such, I invited members of the ACX (Astral Codex Ten) meetup[4] in Edinburgh, where there is a crossover with the EA movement, to read my post above, and got some good feedback that I thought deserved a response.

“They ignore the influence of Peter Singer and Derek Parfit (Toby Ord’s doctoral advisor), whose utilitarian thought experiments have woken many people out of their moral slumber, and focus on the institutions they’ve created.”

This sentence is pretty misleading. The linked website is just a short summary. EAs are very aware of the influence of Parfit and Singer. Of people who read their books for fun, I would guess >50% are somehow involved in effective altruism. Both have spoken at EA conferences.

I think this is a fair criticism: people in the EA community do know about Parfit and Singer. What I was trying to express was that EA doesn’t get bogged down in philosophical debates and is a practical movement. This is good!

“Would they have the same concerns if they lived somewhere without dreaming spires like Glasgow?”

It makes sense to me that a movement partially about the importance of distant people would start in a more prosperous city. Note though that Will MacAskill is from Glasgow!

This is true, and it leads inevitably to debates over how much EA should focus on structural issues versus emergency aid. I did mention that MacAskill is from Glasgow in a footnote, but do read the opening chapters of Darren McGarvey’s Poverty Safari for insight into how separated communities in Glasgow can be. When McGarvey first went to the West End of Glasgow, he couldn’t believe that people could walk around so freely without worrying about being threatened.

“It seems awfully convenient that EA nerds can stop worrying about structural problems…”

Also, EA spends 3x on global health as it does on existential risk. There are probably less than 100 people in the entire world working on x-risk from AI full time

This is a very good point, and it is notable that Giving What We Can puts global health above x-risk on its website. That said, AI alignment seems to be a far more exciting topic, as I’m reminded every time I download a Lunar Society podcast.

I assume you meant ‘effect’ not ‘affect’ in the title?

The original title was ‘The Alienating Affect of Effective Altruism’, with ‘affect’ used as a synonym for ‘feeling’. On reflection, perhaps it is slightly confusing and a pretty bad pun. Titling things is hard.

"If EA had stopped there and spent their time helping improve the lives of the poorest humans on the planet, I think they would be hailed as heroes."

This made me laugh: plenty of people have written long objections to EAs that complain about nothing other than trying to use reasoning to help the lives of the poorest humans. This is a representative example: I think that the attacks aren't as memetically effective as the current "they only care about AI, which is dumb" critique, but complaints of the form

It's not promoting communist revolution, and thus not really systemic and ultimately ineffective

Not focusing on your local community is morally bad, actually

Global development work is inherently too suspect to put money or effort into

EA will drive lower donations by over-focusing on rational reasons instead of the emotions that connect with donors.

But if we all do the best thing, nobody will do the other things, and is life worth living then? These people don't actually understand marginal utility, but the critique still gets made.

are things that I became very well acquainted with.

I think this response is worth including here to show that EA people are aware of these critiques, and that making them is perhaps not as devastating as one might think.

Notes

[1] I would like to see how much crossover there is between those who debated atheism online in the noughties and the EA movement. Sam Harris, one of the ‘Four Horsemen’ of New Atheism, recently pledged to give 10% of his income through the EA-associated organisation Giving What We Can.

[2] Something that has erroneously led people to think that a life can be saved for $10.

[3] Apparently William MacAskill, previously William Crouch, is from Glasgow, although his accent is quite hard to place.

[4] This meetup is organised by Sam Enright, who runs the excellent blog The Fitzwilliam.
